The traditional image of robotics, characterized by large, caged arms performing repetitive, precise welds on an automotive assembly line, is a relic of a past era. This was the age of automated robots—powerful, dumb, and blind. The future belongs to autonomous robots, a new generation of machines powered by artificial intelligence that can see, learn, reason, and adapt to complex, unstructured environments. This paradigm shift, fueled by advancements in AI, computer vision, and machine learning, is moving robotics out of the highly controlled factory floor and into the dynamic chaos of everyday life, transforming industries from logistics and agriculture to healthcare and domestic services.

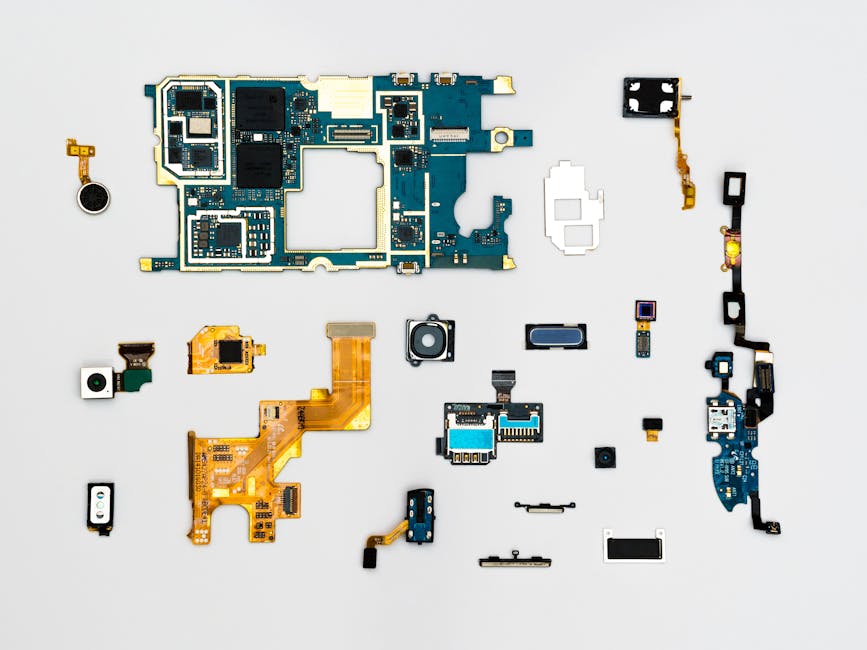

The core of this transformation lies in the transition from deterministic programming to probabilistic learning. Traditional industrial robots operate on pre-programmed code that dictates every movement with sub-millimeter repeatability. They excel in environments where everything is predictable, but they fail utterly when faced with the unexpected. AI shatters this limitation. Through techniques like deep learning and reinforcement learning, robots are no longer explicitly programmed for every task; instead, they learn from vast datasets of examples or through trial and error in simulated environments. A robot arm tasked with bin picking (identifying and retrieving a specific part from a jumbled container) can now be trained on millions of images of that part under different lighting conditions, angles, and occlusions. Its AI-driven vision system doesn’t just see pixels; it recognizes the part, infers depth, and estimates the grasp point most likely to yield a successful pickup, adapting in real time to the chaotic arrangement of objects.
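
The "trial and error" idea can be made concrete with a deliberately tiny sketch. It is not how production bin-picking systems are trained; it assumes a hypothetical setup with three candidate grasp points whose true success probabilities are hidden from the learner, and it uses an epsilon-greedy strategy (a basic reinforcement-learning technique) to discover which grasp works best. The function name and all numbers are illustrative assumptions.

```python
import random

def learn_best_grasp(success_probs, trials=5000, epsilon=0.1, seed=0):
    """Estimate per-grasp success rates by trial and error (epsilon-greedy).

    success_probs are the hidden "true" success rates of a simulated
    gripper; the learner only ever observes success or failure.
    """
    rng = random.Random(seed)
    counts = [0] * len(success_probs)
    values = [0.0] * len(success_probs)  # running mean success rate per grasp
    for _ in range(trials):
        if rng.random() < epsilon:
            # Explore: try a random grasp point.
            arm = rng.randrange(len(success_probs))
        else:
            # Exploit: use the grasp with the best estimate so far.
            arm = max(range(len(values)), key=values.__getitem__)
        reward = 1.0 if rng.random() < success_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental update of the running mean.
        values[arm] += (reward - values[arm]) / counts[arm]
    best = max(range(len(values)), key=values.__getitem__)
    return best, values

# Hypothetical hidden success rates for three candidate grasp points.
best, estimates = learn_best_grasp([0.2, 0.5, 0.8])
print("best grasp index:", best)
print("estimated success rates:", [round(v, 2) for v in estimates])
```

A real system would replace the three fixed options with grasp candidates proposed by a vision model, but the loop of acting, observing the outcome, and updating an estimate is the same.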

This capability is revolutionizing logistics and supply chain management. E-commerce warehouses, once reliant on human workers navigating vast aisles, are now deploying fleets of autonomous mobile robots (AMRs). These AMRs are guided by sophisticated AI algorithms that process real-time data from onboard cameras, lidar, and inertial sensors. Their onboard software performs simultaneous localization and mapping (SLAM) to build a map of the facility and track the robot’s position within it, and path planners use that map to route dynamically around human coworkers, fallen obstacles, and other robots. They are not following a pre-set wire in the floor; they are intelligently plotting the most efficient path to their destination. This extends to robotic sorting arms that can handle thousands of different products, their AI vision systems rapidly identifying items and learning the most effective way to grip everything from a soft stuffed animal to a rigid book, dramatically accelerating order fulfillment.
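
To illustrate what "plotting the most efficient path" means at its simplest, here is a minimal sketch that runs breadth-first search over an occupancy grid, where `1` marks a blocked cell such as a shelf or fallen box. This is a stand-in for illustration only: real AMR planners typically work on continuous maps with more capable algorithms, and the grid layout and the names `shortest_path` and `warehouse` are assumptions made for this example.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid (1 = blocked).

    Returns the list of (row, col) cells from start to goal, or None
    if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None

# Hypothetical warehouse floor: 0 = free aisle, 1 = obstacle.
warehouse = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
path = shortest_path(warehouse, (0, 0), (3, 0))
print(path)
```

When a new obstacle appears, the robot updates the grid and replans; if no route exists, the function returns `None` and the robot can wait or request help.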

Beyond navigation and manipulation, AI is enabling a breakthrough in human-robot collaboration. The concept of cobots, or collaborative robots, is maturing from a simple, safety-monitored assistant to a true intelligent partner. Advanced AI models allow these robots to understand human intention through gesture recognition, natural language processing, and even predictive analytics. In a manufacturing setting, a human worker might perform a complex assembly task. A vision-based AI system can observe this process, learn the steps, and then assist by anticipating the next needed component or tool, handing it to the worker without an explicit command. This symbiotic relationship combines human dexterity and problem-solving skills with robotic precision, strength, and endurance, creating hybrid workcells that are far more flexible and efficient than purely manual or purely automated ones.

The agricultural sector is undergoing a similar AI-driven robotic revolution. Agribots equipped with multispectral cameras and AI-powered image recognition can traverse fields, identifying individual plants. They can distinguish a strawberry plant from a weed with high accuracy, enabling micro-targeted herbicide application that can sharply reduce chemical usage compared with blanket spraying (reductions of up to 90% are often cited). These robots can analyze the color, shape, and size of fruits to determine optimal harvest time, using delicate manipulators to pick only the ripe produce, thereby reducing waste and labor costs. This shift towards precision agriculture, powered by autonomous, data-collecting robots, is critical for sustainable food production to support a growing global population.

Perhaps the most profound impact is emerging in healthcare. Robotic surgery has been established for years, but AI is elevating it to new levels of precision and safety. AI algorithms can overlay pre-operative scans (like MRI or CT) onto the live video feed of the surgical site, providing surgeons with augmented reality guidance that highlights critical structures like nerves and blood vessels. More autonomously, AI-driven robotic systems can now assist in suturing, where the robot learns the optimal tension and stitch placement from expert surgeons, executing repetitive aspects of the procedure with unwavering consistency. In rehabilitation, exoskeletons and robotic prosthetics are using AI to adapt to the user’s gait and intention, providing natural movement and real-time support that was previously science fiction.

The development of these intelligent machines is accelerated by simulation and digital twin technology. Training AI models in the real world is slow, expensive, and potentially dangerous. Instead, developers create highly realistic virtual environments—digital twins of factories, warehouses, or even city streets. Millions of virtual robots can train in parallel within these simulations, encountering countless edge cases and scenarios that would take decades to replicate physically. They learn to navigate around virtual people, handle virtual package deformations, and respond to virtual equipment failures. This simulation-to-reality (Sim2Real) transfer, a complex AI challenge in itself, allows robots to acquire vast experience before ever being deployed, drastically reducing development time and ensuring robust, well-tested AI behaviors.
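
One common way to help simulated experience carry over to real hardware is domain randomization: each training episode runs with slightly different physics and sensing conditions so the learned behavior does not overfit to one idealized simulator. The sketch below shows only the sampling step, under stated assumptions. The parameter names, their ranges, and the `SimParams` class are hypothetical and not taken from any particular simulator.

```python
import random
from dataclasses import dataclass

@dataclass
class SimParams:
    friction: float          # floor friction coefficient
    light_intensity: float   # relative scene brightness
    package_mass_kg: float   # mass of the handled package
    camera_noise_std: float  # std. dev. of simulated pixel noise

# Hypothetical ranges; a real project would tune these to its hardware.
RANGES = {
    "friction": (0.4, 1.0),
    "light_intensity": (0.3, 1.5),
    "package_mass_kg": (0.1, 5.0),
    "camera_noise_std": (0.0, 0.05),
}

def sample_params(rng):
    """Draw one randomized simulation configuration."""
    return SimParams(**{name: rng.uniform(lo, hi)
                        for name, (lo, hi) in RANGES.items()})

rng = random.Random(42)
# One configuration per training episode.
episodes = [sample_params(rng) for _ in range(1000)]
print(episodes[0])
```

Each sampled configuration would then be passed to the simulator before an episode starts, so the policy sees heavy and light packages, bright and dim scenes, and slippery and grippy floors during training.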

However, the path forward is not without significant challenges. The increased complexity of AI-driven systems introduces new layers of ethical and safety concerns. The “black box” nature of some deep learning models makes it difficult to understand why a robot made a specific decision, a critical issue for accountability in the event of an accident. Ensuring the security of these connected, intelligent systems is paramount, as vulnerabilities could be exploited with dangerous consequences. Furthermore, the societal impact, including workforce displacement and the economic inequality it might exacerbate, requires proactive management through policy and re-skilling initiatives. The future will demand a framework for certifying AI safety and establishing clear legal and ethical guidelines for autonomous robotic action.

The hardware itself is evolving to keep pace with AI’s software demands. The rise of neuromorphic computing, which mimics the architecture of the human brain, promises to process sensory data and make decisions with far greater energy efficiency than traditional CPUs and GPUs. This is essential for mobile robots that must operate for long periods on battery power. Similarly, advancements in soft robotics, creating compliant and adaptable limbs from malleable materials, are being combined with AI to create machines that can safely interact with fragile objects and work seamlessly alongside humans. The integration of AI is pushing the boundaries of what is mechanically possible, leading to new form factors and capabilities.

Looking at the immediate horizon, large language models (LLMs) and foundation models are set to become the next transformative layer. Instead of training a robot for a single specific task, researchers are developing general-purpose robotic models. A robot could be given a high-level, natural language command like “tidy up this room” or “assemble this furniture based on the instructions.” The robot’s AI system, built on a foundation model trained on vast text, image, and video data, would break down the command into a sequence of logical steps, understand the goal-state of a “tidy” room, and manipulate objects accordingly. This moves robotics from single-task automation to general-purpose capability, a fundamental leap towards truly versatile machines.
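
The overall shape of such a system can be sketched in a few lines: a planner turns a natural language command into a sequence of steps, and the robot checks each step against the skills it can actually perform before executing anything. In this sketch the planner is a hard-coded stub standing in for a foundation model, and the skill names, the `stub_planner` function, and its canned plan are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str]  # (skill name, target description)

# Low-level skills the robot is assumed to have (hypothetical).
SKILLS: Dict[str, Callable[[str], str]] = {
    "locate": lambda target: f"located {target}",
    "pick": lambda target: f"picked {target}",
    "place": lambda target: f"placed {target}",
}

def stub_planner(command: str) -> List[Step]:
    """Stand-in for a foundation model: returns a canned plan."""
    if command == "tidy up this room":
        return [("locate", "toy"), ("pick", "toy"), ("place", "toy in bin")]
    return []

def execute(command: str,
            planner: Callable[[str], List[Step]] = stub_planner) -> List[str]:
    """Plan a command, validate every step, then run the steps in order."""
    plan = planner(command)
    unknown = [skill for skill, _ in plan if skill not in SKILLS]
    if unknown:
        # Refuse the whole plan rather than act on an unsupported step.
        raise ValueError(f"plan uses unsupported skills: {unknown}")
    return [SKILLS[skill](target) for skill, target in plan]

log = execute("tidy up this room")
print(log)
```

Validating the plan before acting matters because a learned planner can propose steps the hardware cannot perform; rejecting the plan up front is safer than failing halfway through a task.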

The convergence of AI, robotics, and the Internet of Things (IoT) is creating a seamlessly connected ecosystem of intelligence. An autonomous robot in a smart factory does not operate in isolation. It receives real-time data from sensors on production equipment, anticipating maintenance needs. It communicates with the factory’s central planning system to adjust its tasks based on shifting orders. It can call an elevator using an API to move between floors. This ecosystem approach turns the robot from an isolated island of automation into a node in a vast, intelligent network, optimizing processes at a systemic level far beyond its immediate physical task.

In field services, robots are taking on dangerous and undesirable jobs. AI-powered drones are inspecting critical infrastructure like wind turbines, bridges, and power lines, using computer vision to identify cracks, corrosion, or other defects with more consistency and detail than the human eye. They autonomously navigate around structures, capturing data from every angle without putting a human inspector at risk. Similarly, robots are deployed in disaster response, where they navigate rubble to search for survivors, and in nuclear facilities, where they handle hazardous materials under remote control by operators who stay at a safe distance.